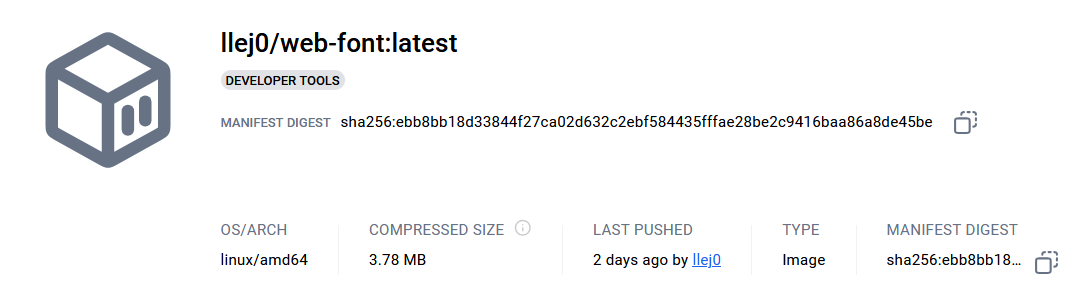

How I Created a 3.78MB Docker Image for a JavaScript Service


On the server side, JavaScript is typically run using Node.js. Besides Node, other popular runtimes include Bun and Deno.

However, all three of these runtimes are large. Even in their most minimal configurations, they still exceed 50MB. In this article, I’m going to share how I migrated a service originally written for Node.js and packaged it as a Docker image of only 3.78MB.

Choosing a JavaScript Runtime (llrt)

To achieve such a small Docker image, Node.js is no longer a viable option. The most popular lightweight JavaScript engine that fits the bill is QuickJS.

The project I was migrating is a font trimming tool called web-font. It is not pure JavaScript: it also depends on file I/O and HTTP server APIs. QuickJS is only an interpreter for the language itself and provides none of these APIs.

So I decided to use llrt, a lightweight runtime from AWS Labs that is built on QuickJS and adds a subset of Node-style APIs on top of it.

Challenges in the Migration

The main challenge was that llrt doesn’t provide an HTTP module (neither does txiki.js). Luckily, it does offer a net module, so the HTTP handling can be implemented by hand on top of raw TCP sockets.
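To illustrate what "HTTP on top of net" involves, here is a minimal sketch of the two pieces such a server needs: parsing the request head out of the bytes received so far, and serializing a response. This is not the web-font implementation; the names `parseRequestHead` and `buildResponse` are hypothetical, and the sketch ignores request bodies, keep-alive, and chunked encoding.

```typescript
// Minimal HTTP/1.1 helpers meant to sit on top of a raw TCP socket.
// Hypothetical names; request bodies and keep-alive are out of scope.

interface RequestHead {
  method: string;
  path: string;
  headers: Record<string, string>;
}

// Parse the request line and headers from the text received so far.
// Returns null until the blank line that ends the header block has arrived.
function parseRequestHead(raw: string): RequestHead | null {
  const end = raw.indexOf("\r\n\r\n");
  if (end === -1) return null;

  const lines = raw.slice(0, end).split("\r\n");
  const [method, path] = lines[0].split(" ");
  if (!method || !path) return null;

  const headers: Record<string, string> = {};
  for (const line of lines.slice(1)) {
    const colon = line.indexOf(":");
    if (colon > 0) {
      // Header names are case-insensitive, so normalize them to lowercase.
      headers[line.slice(0, colon).trim().toLowerCase()] = line.slice(colon + 1).trim();
    }
  }
  return { method, path, headers };
}

// Serialize a response. Content-Length is counted in bytes, not characters.
function buildResponse(
  status: number,
  reason: string,
  body: string,
  contentType = "text/plain; charset=utf-8",
): string {
  const length = new TextEncoder().encode(body).length;
  return [
    `HTTP/1.1 ${status} ${reason}`,
    `Content-Type: ${contentType}`,
    `Content-Length: ${length}`,
    "Connection: close",
    "",
    body,
  ].join("\r\n");
}
```

In a connection handler, the idea would be to append each incoming chunk to a buffer, call `parseRequestHead` until it returns a value, and then write the string from `buildResponse` back and close the socket. That wiring assumes llrt's net module follows the same `createServer` and socket event shape as Node's, which is worth checking against the llrt documentation.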

During this process, I also discovered a CPU usage anomaly in llrt: https://github.com/awslabs/llrt/issues/546.

Building the Tiny Docker Image

1. Code Packaging

I used tsup to bundle the TypeScript source code into a single JavaScript file.
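A tsup configuration for this kind of single-file bundle might look roughly like the sketch below. The entry path, target, and option choices are assumptions for illustration rather than the project's actual settings; only the `dist_backend` output directory is taken from the Dockerfile in this article.

```typescript
// tsup.config.ts (illustrative; paths and options are assumptions)
import { defineConfig } from "tsup";

export default defineConfig({
  entry: { app: "src/index.ts" }, // hypothetical entry point
  outDir: "dist_backend",         // directory the Dockerfile copies from
  format: ["esm"],
  target: "es2020",               // assumed; pick what the runtime supports
  noExternal: [/.*/],             // inline all dependencies: no node_modules at runtime
  minify: true,
  clean: true,
});
```

Inlining every dependency matters here because the final image contains no `node_modules` directory, so anything left external would fail to resolve at runtime.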

Then I used the llrt compile command to compile the bundled JavaScript file into a .lrt bytecode file, which reduced the size by a further 30% or so.

2. Dockerfile

Thanks to llrt, I could avoid any external dependencies and use FROM scratch to achieve the smallest possible Docker image size.

```dockerfile
FROM scratch
WORKDIR /home/
COPY dist_backend/app.lrt /home/app.lrt
COPY llrt /home/llrt
COPY dist/ /home/dist/
CMD ["/home/llrt", "/home/app.lrt"]
```

Once compressed by Docker, the resulting image is just 3.78MB.

Performance Considerations

llrt runs code significantly slower than Node.js; in my scenario, the same work takes about twice as long. Garbage collection (GC) is also much slower.

However, its initial memory footprint and startup time are far better than those of Node.js.
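If you want to check the runtime-speed difference for your own workload, one simple approach is to run the same script under both runtimes and compare wall-clock times. The sketch below is a hypothetical harness, not the measurement behind the numbers above; `workload` is a stand-in for whatever your service actually does, and it relies only on `Date.now()` and `console.log`.

```typescript
// Hypothetical micro-benchmark: run the same file under each runtime
// and compare the reported times.
function timeIt(label: string, iterations: number, fn: () => void): number {
  const start = Date.now();
  for (let i = 0; i < iterations; i++) fn();
  const elapsed = Date.now() - start;
  console.log(`${label}: ${elapsed} ms for ${iterations} iterations`);
  return elapsed;
}

// Stand-in CPU-bound workload; replace with a call into your own code.
function workload(): number {
  let sum = 0;
  for (let i = 0; i < 10000; i++) sum += i % 7;
  return sum;
}

timeIt("workload", 1000, workload);
```

A loop like this only measures steady-state execution. Startup time and memory footprint need to be measured from outside the process, for example by timing the whole command.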

Given the current limitations of llrt, it’s easy to run into issues. Unless you urgently need to reduce the memory footprint or cold start time of your JavaScript application, or you’re just curious like me, I’d still recommend sticking with Node.js for most use cases.
